Last year, I teamed up with Lucas Feldmann and Josefine Zerbe to replicate the Spatial GAN (SGAN) designed by Jetchev, Bergmann & Vollgraf (2016) at Zalando Research. Their SGAN model offers an elegant way to generate non-repeating patterns of any texture. In the first Jupyter notebook below, we describe the model and our training results on different textures. The second notebook contains a TensorFlow implementation of the original SGAN architecture, along with the training procedure we used to generate our textures. See for yourself, and feel free to train the network on your own texture images:
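The key idea behind SGAN is that the generator is fully convolutional and takes a *spatial* noise tensor (a grid of noise vectors) instead of a single noise vector. The size of the generated texture therefore follows the size of the noise grid. The snippet below is a minimal NumPy sketch of that idea, not our TensorFlow implementation. It assumes untrained random weights, uniform noise in [-1, 1], and nearest-neighbour upsampling followed by a 3x3 convolution in place of fractionally strided (transposed) convolutions. The layer count and channel widths are hypothetical choices made for illustration.

```python
import numpy as np

def sample_spatial_noise(l, m, d, rng):
    """Draw an l x m grid of d-dimensional noise vectors (assumed uniform in [-1, 1])."""
    return rng.uniform(-1.0, 1.0, size=(l, m, d))

def upsample2x(x):
    """Nearest-neighbour upsampling by a factor of 2 along both spatial axes."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def conv3x3(x, w):
    """'Same'-sized 3x3 convolution with reflect padding.
    x: (H, W, C_in), w: (3, 3, C_in, C_out) -> (H, W, C_out)."""
    h, wd, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)), mode="reflect")
    out = np.zeros((h, wd, w.shape[3]))
    for i in range(3):
        for j in range(3):
            # Each kernel tap is a per-pixel linear map C_in -> C_out.
            out += xp[i:i + h, j:j + wd, :] @ w[i, j]
    return out

def toy_generator(z, weights):
    """Hypothetical fully convolutional generator: every layer doubles H and W.
    ReLU on hidden layers, tanh on the output so pixel values lie in [-1, 1]."""
    x = z
    for k, w in enumerate(weights):
        x = conv3x3(upsample2x(x), w)
        x = np.tanh(x) if k == len(weights) - 1 else np.maximum(x, 0.0)
    return x

rng = np.random.default_rng(0)
d = 8                         # noise channels per grid cell (hypothetical)
channels = [d, 16, 16, 3]     # 3 layers -> 2**3 = 8x spatial upsampling
weights = [rng.normal(0.0, 0.1, size=(3, 3, c_in, c_out))
           for c_in, c_out in zip(channels[:-1], channels[1:])]

# The same weights produce textures of different sizes from different noise grids.
small = toy_generator(sample_spatial_noise(4, 4, d, rng), weights)
large = toy_generator(sample_spatial_noise(10, 16, d, rng), weights)
print(small.shape)  # (32, 32, 3)
print(large.shape)  # (80, 128, 3)
```

Because no layer in the generator depends on the absolute image size, a trained SGAN generator can be applied to a larger noise grid at sampling time than it saw during training, which is what makes large, non-repeating textures possible.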

You may also enjoy

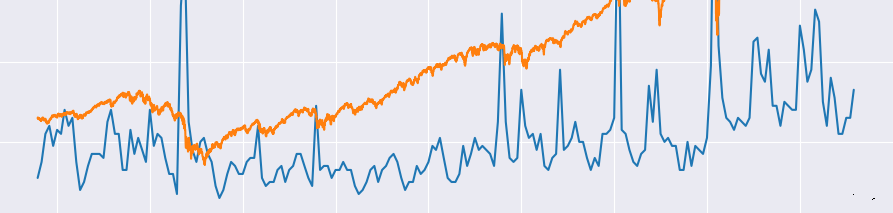

Google Trends meets Yahoo Finance

Investigating the relation of online search and market data.

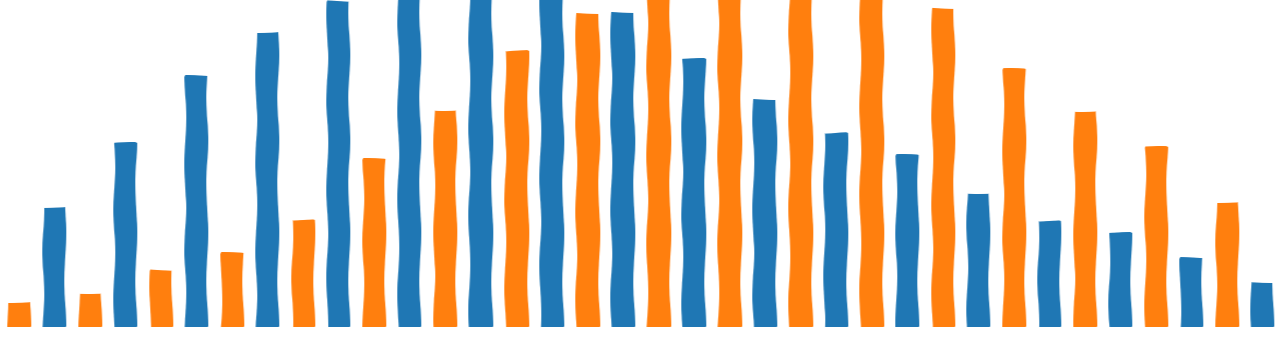

Entropy-based measures in Machine Learning

A collection and explanation of the most relevant Entropy-based measures in Machine Learning.

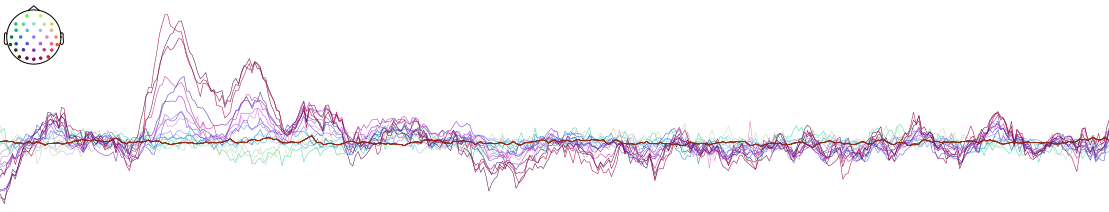

Introducing: ASRpy

Artifact Subspace Reconstruction in Python.

Introducing: CatEyes

Unified Gaze Classification for Eye Tracking.