Over the last few years, I have worked a lot on the classification of eye tracking data.

I found that there are tons of different gaze classification algorithms out there, but many of them required a huge amount of installation, implementation, and data-wrangling work before they were ready to use. If you have ever had to apply gaze classification, you probably know what I'm talking about. Because this problem kept me and many of my lab members from actually using these algorithms, I decided to bring them together in one toolbox and make all of their functionality available through one simple, easy-to-apply workflow.

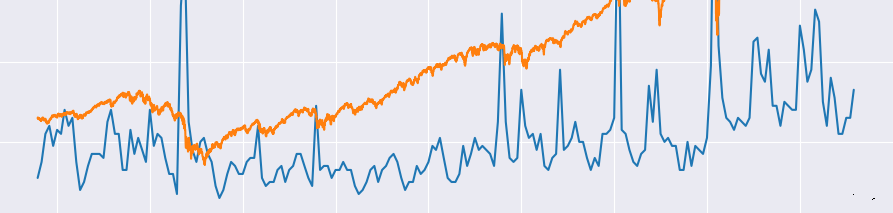

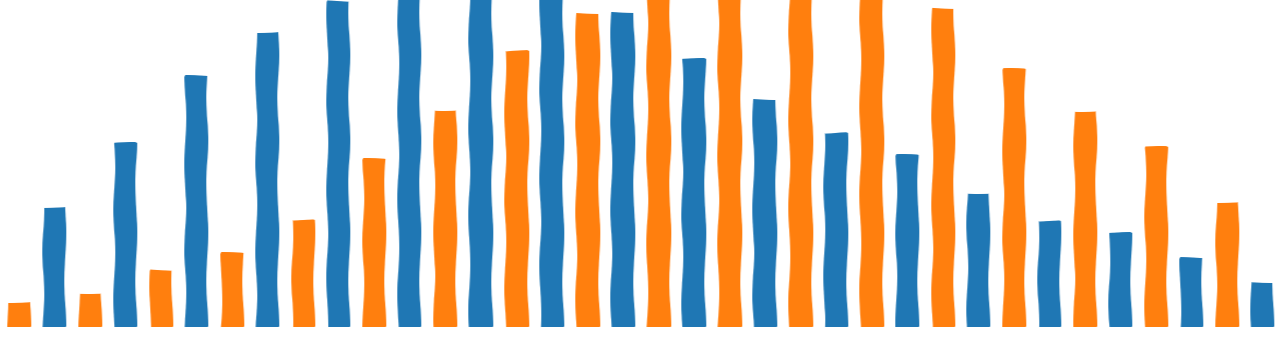

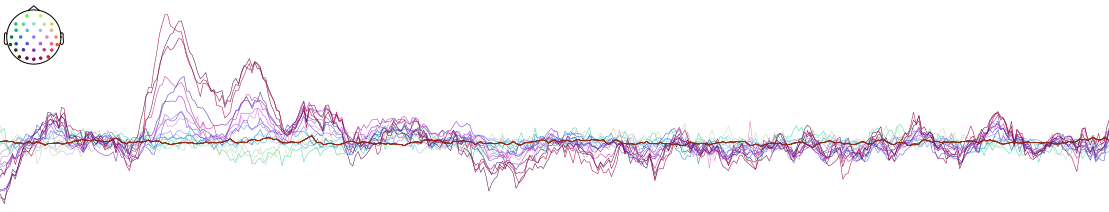

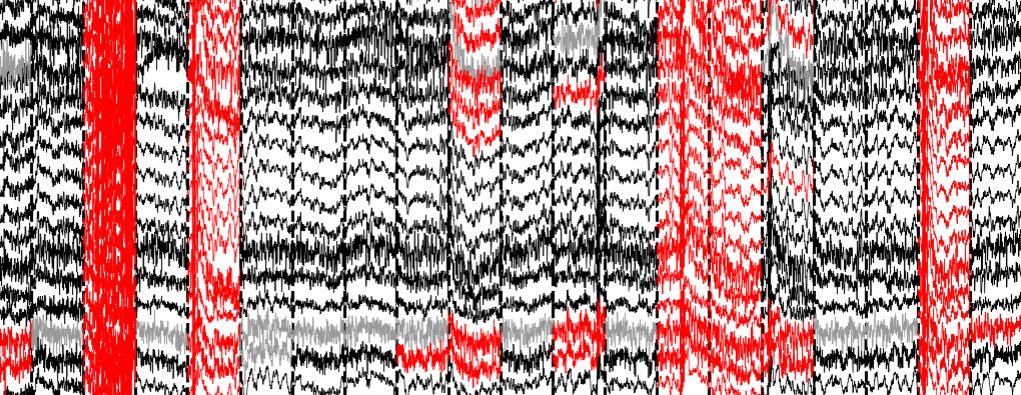

The result of this work is the CatEyes Toolbox. It not only lets you test different gaze classification algorithms with simple, standardized functions, but also provides tools to easily handle and visualize eye tracking data, especially with respect to classification.
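To give a rough idea of what a gaze classification algorithm does, here is a minimal sketch of a velocity-threshold classifier (I-VT) in plain Python. It is not CatEyes code and does not use the CatEyes API. The function name `ivt_classify`, the input format (gaze positions in degrees of visual angle, timestamps in seconds), and the default threshold of 30 °/s are all assumptions chosen for illustration. Each sample whose point-to-point velocity exceeds the threshold is labeled a saccade, and every other sample a fixation.

```python
import math
from typing import List, Sequence


def ivt_classify(x: Sequence[float], y: Sequence[float], t: Sequence[float],
                 threshold: float = 30.0) -> List[str]:
    """Hypothetical I-VT sketch: label each sample 'fixation' or 'saccade'.

    Assumes x and y are gaze positions in degrees of visual angle, t are
    timestamps in seconds, and threshold is given in degrees per second.
    """
    n = len(x)
    if len(y) != n or len(t) != n:
        raise ValueError("x, y and t must have the same length")
    if n == 0:
        return []

    labels: List[str] = []
    for i in range(1, n):
        dt = t[i] - t[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Point-to-point angular velocity between consecutive samples.
        velocity = math.hypot(x[i] - x[i - 1], y[i] - y[i - 1]) / dt
        labels.append("saccade" if velocity > threshold else "fixation")

    # The first sample has no predecessor; give it the label of the first
    # interval (or 'fixation' if there is only a single sample).
    return [labels[0] if labels else "fixation"] + labels


if __name__ == "__main__":
    # Six samples at an assumed 250 Hz sampling rate with one large jump.
    xs = [0.0, 0.1, 0.2, 5.0, 10.0, 10.1]
    ys = [0.0] * 6
    ts = [0.0, 0.004, 0.008, 0.012, 0.016, 0.020]
    print(ivt_classify(xs, ys, ts))
```

Real toolboxes add steps this sketch leaves out, such as noise filtering, merging short fixations, and minimum-duration checks, which is exactly the kind of work a unified toolbox is meant to take off your hands.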

If this idea caught your attention, feel free to check out the repository, where you will find everything you need to know about installation and usage, as well as practical examples of how to apply gaze classification directly to your own eye tracking project. Probably the best way to get an idea of how CatEyes works is to try our CatEyes minimal example. You can inspect the introductory example below, or click the "Open in Colab" button to run it in Google Colab's hosted runtime.

Of course, this is far from everything you can do with CatEyes. If you want to know what other functionality CatEyes provides, check out the repository or have a look at the CatEyes documentation.